This article is part of my series, “What UX Courses Didn’t Teach Me (But Real Research Did).”

Over the next few days, I’m sharing five essential UX research skills that certifications and bootcamps never fully taught me. I learned them from real-world projects, messy data, and unexpected conversations with users.

Each day, I’ll break down one skill, the lesson behind it, and what actually makes a difference when you’re practicing UX research in the wild.

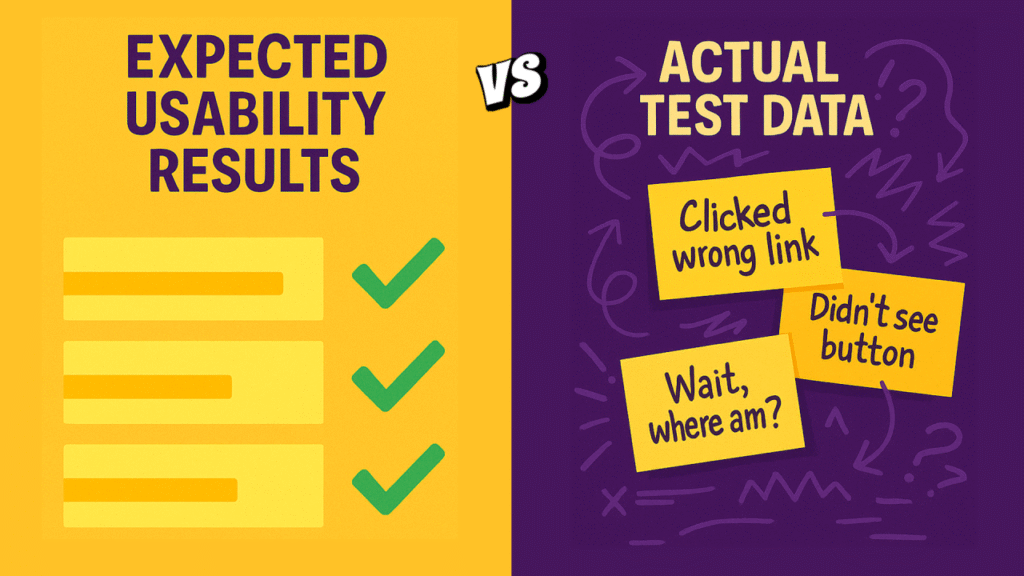

Skill #3: Running Usability Tests vs. Managing Messy, Conflicting Data

Usability testing seems clean and logical when you first learn it: write clear tasks, set up a script, watch where users struggle, and capture insights. Reality? It’s far messier, and far more human. In practice, clarity and consistency are often missing.

Participants frequently misunderstand tasks. They give one-word answers like “fine” or “okay” even when they are visibly stuck. They also contradict themselves, claiming they completed a task while their actions show otherwise.

Even when participants succeed, they rarely follow the planned flow. They take unexpected navigation routes, skip “obvious” shortcuts, or stumble onto a solution by accident.

Defending the data gets harder when results don’t match stakeholders’ expectations. In those moments, I back up every insight with direct evidence: screenshots, session recordings, and participant quotes.

Real usability data is messy and unpredictable. Good researchers translate this chaos into actionable clarity.

Next, I’ll talk about the hardest lesson I learned about sharing research findings: finding patterns is easy—getting people to care about them is the real work.