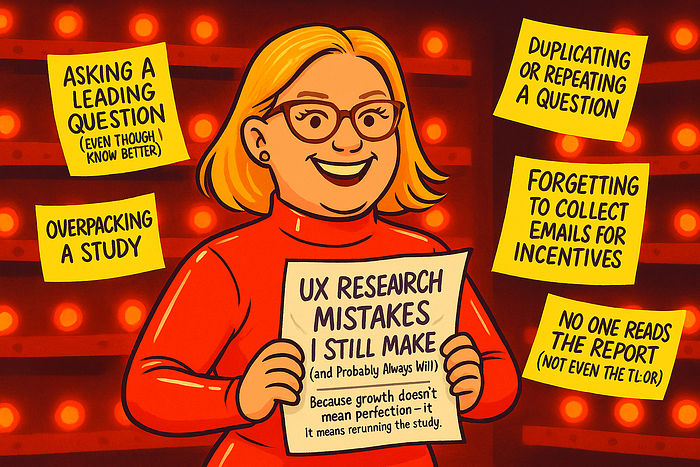

Because growth doesn’t mean perfection — it means rerunning the study.

After years of running studies, writing reports, and trying to advocate for UX research at a company where it was still relatively new, I’d love to tell you I’ve perfected my process.

But the truth? I still mess things up.

Sometimes, I ask a leading question I thought I’d outgrown. Sometimes, I forget to collect emails for incentives. And sometimes — despite my beautifully crafted TL;DR — no one reads the report.

And I’ve learned… that’s okay.

These mistakes don’t make me a bad researcher. They make me a human one.

So here are 5 UX research mistakes I still make (and probably always will) — and what they’ve taught me along the way.

Mistake #1: Asking a Leading Question (Even Though I Know Better)

I’ve spent a lot of time teaching others how to avoid leading questions. I know how they bias responses and shape user behavior. I know better.

And yet…

Midway through a session, I’ll hear myself ask something like, “Did you find that helpful?”

The second the words are out, I’m already kicking myself.

It usually happens when I try to keep the session moving or low-key, hoping they “got it.” But leading questions aren’t about malice — they’re about comfort. They sneak in when I’m rushing, tired, or trying to reassure the user.

Lesson: Experience doesn’t make you immune to bad habits. But it does make you faster at catching them and recovering in the moment.

Now, when it happens, I don’t panic. I’ll rephrase, backtrack, or let the next question clarify things. I’ve learned to listen to those moments and adjust in real time.

Because it’s not about being flawless — it’s about being aware.

Mistake #2: Overpacking a Study

When planning a study, my brain goes straight to:

“TEST ALL THE THINGS!!”

It starts with good intentions.

We’ve got limited time with users, so why not validate the new nav, test the homepage redesign, compare three button labels, and maybe sneak in a modal flow while we’re at it?

The result? A bloated script, overwhelmed users, rushed tasks, and feedback that barely scratches the surface.

Every time I do it, I regret it. The session feels chaotic, the insights are muddy, and synthesis becomes a frustrating exercise in separating noise from what actually matters.

Lesson: Nothing gets the depth it deserves when everything is a priority.

Now, I scope ruthlessly. If something has to wait for the next round, it waits. Focused studies not only give me better insights, but they also build more trust with stakeholders who get clear, actionable takeaways.

Mistake #3: Duplicating or Repeating a Question

There’s nothing like that moment mid-session when a participant says, “Didn’t you already ask this?”

And I realize… yes. Yes, I did.

It usually happens when I’m trying to work fast. I duplicate a question to keep the format consistent, intending to update the wording later, and then I miss it. Sometimes, I catch it during a preview. But sometimes? I don’t. And a very observant participant calls me out mid-study.

It’s not a huge mistake, but it can throw off the flow, make the session feel less thoughtful, or even undermine participant trust.

Lesson: Clear structure beats clever wording. Always do a logic and flow check before hitting record.

Now, I read my scripts out loud. I walk through them like I’m the user. If I hear myself repeating ideas or asking the same thing twice, I know it’s time to tighten up.

Because if I’m confused while reading it, my users will definitely be confused.

Mistake #4: Forgetting to Collect Emails for Incentives

The sessions are in, and the feedback is solid. You’re getting ready to send incentives when you realize…

There’s no contact info.

This usually happens when I reuse a study I originally ran with general panel participants and later open it up to our actual customers. The platform doesn’t require email collection for panel users — but when customers participate, I need a way to follow up.

And suddenly, I don’t have one.

What was meant to be a seamless, efficient process turns into a last-minute scramble to track down participant information, which is not exactly the experience I want to deliver.

Lesson: When reusing studies, review every step through the lens of the current audience — including incentive logistics.

Now, I always double-check the screener and wrap-up questions before relaunching a study for customers. If we’re sending a thank-you, their email needs to be part of the flow. No assumptions, no do-overs.

Mistake #5: No One Reads the Report (Not Even the TL;DR)

You spend hours writing a thoughtful research report. You organize your insights, embed video clips, highlight pain points, and — to make things easier — add a clean, scannable TL;DR at the very top.

And still… someone asks in the meeting:

“Wait, did we test this?”

It’s one of the more deflating moments, not because people ignore the work, but because it reminds me that even the clearest report can go unread if I just drop it into Confluence and walk away.

Lesson: A report isn’t the end of the process — it’s a conversation starter. And sometimes, you need to talk.

Now, I don’t just share reports — I schedule time to walk through them. I present findings in formats people actually engage with, like visuals and short videos, then open the floor for questions and real-time discussion.

Because the value of research isn’t in the report — it’s in what people do with it.

Conclusion

The longer I do this work, the more I realize that UX research isn’t about perfection — it’s about awareness, reflection, and rerunning the study when something doesn’t work.

Mistakes still happen. Some are small. Some are frustrating. Some I’ll make again.

But each one makes me better.

So, if you’re still catching yourself repeating questions, overpacking studies, or wondering if anyone actually read your report, you’re not alone.

You’re learning. You’re adapting.

And you’re doing the work that matters.